|

| Figure 1: Quantum parallelism |

The answer, of course, can be determined by pressing each button to see what it does. And it is necessary to press both buttons - pressing just one button won't convey enough information to determine the answer.

|

| Figure 2: Black box function |

1: // constant bit definitions

2: bitButtonIndex { A = 0, B = 1 }

3: bitColorIndex { red = 0, green = 1 }

4:

5: // black box function declaration

6: f(input bitButtonIndex) output bitColorIndex;

7:

8: bitColorForButtonA = f(A);

9: bitColorForButtonB = f(B);

10: if (bitColorForButtonA == bitColorForButtonB) then

11: bitButtonsShowTheSameColor = yes;

12: else

13: bitButtonsShowTheSameColor = no;

1: // quantum black box function declaration

2: Uf(input/output qubitX, qubitY); // |x,y> -> |x,y⊕f(x)>

3:

4: // variable declarations

5: qubit x; // x holds button or (later) color information6: qubit y; // y facilitates use of the black box function

7:

8: x = |0>; // prepare x in a known state

9: H(x); // transform x to |+>, i.e., 1/√2(|0> + |1>)

10:11: y = |0>; // prepare y in a known state

12: X(y); // flip state of y to |1>

13: H(y); // transform y to |->, i.e., 1/√2(|0> - |1>)

14:

15: Uf(x, y); // transform x to |+> or |->

16. H(x); // transform x from |+> to |0> or from |-> to |1>

17:

18: bitMeasurementResult = M(x); // measure the value of x19: if (bitMeasurementResult == 0) then

20: bitButtonsShowTheSameColor = yes;

21: else

22: bitButtonsShowTheSameColor = no;

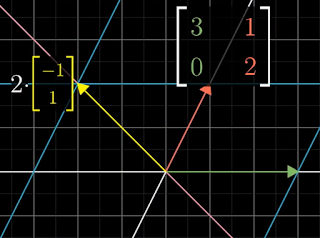

Note that the quantum black box function only needs to be called once to determine whether the buttons show the same color. While the classical function only processes a single specific button at one time, the quantum function can process both button indexes simultaneously. This is represented by the (superposed) state of x as 1/√2(0> + |1>) prior to the call to Uf, where 0 and 1 have the same meaning as per the classical algorithm (i.e., as button indexes for A and B respectively).[1]

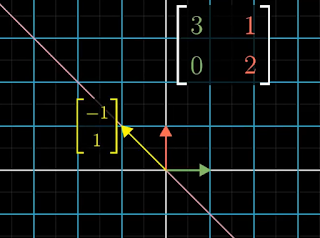

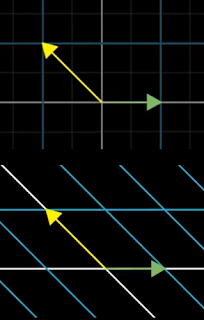

Before going into the details of the above code, this algorithm (called Deutsch's Algorithm) is probably the simplest example demonstrating the parallelism of quantum computing - the ability to evaluate all possible inputs to a function simultaneously. Qubits can also be combined to exponentially increase the parallelism. For example, Figure 1 shows a single four-bit input that is processed by a classical computer to produce a single four-bit output. Compare that to the four-qubit input that allows up to 24, or 16, superposed values to be input and then simultaneously processed by a quantum computer to produce 16 superposed values as output.

In fact, this is what the above algorithm is able to do. Note that unlike the classical algorithm, the black box function does not return the colors associated with the specific buttons. Instead, the function returns a result that represents the relative difference in the button colors (if any), whatever those colors happen to be.

|

| Figure 3: Quantum circuit for Deutsch's Algorithm |

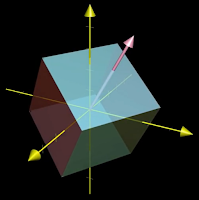

Per line 9, input x is in state 1/√2(|0> + |1>), representing a superposition of pressing button A and pressing button B. Recall that 0 represents button A and 1 represents button B. If button A glows green, a 180° phase change is applied to state |0>. Similarly, if button B glows green, a 180° phase change is applied to state |1>. This can be expressed by the following formula (derived in [2]) where f is the original classical black box function:

1/√2((-1)f(0)|0> + (-1)f(1)|1>)

This reduces to one of the four output states in the following table which can then be changed back to the computational basis state with a Hadamard transform. Note that the measurement ignores the global phase (i.e., the leading minus signs).

Btn A Btn B | Same

f(0) f(1) | Output x Hadamard Measure color?

---------------+---------------------------------------------

red:0 red:0 | 1/√2(|0> + |1>) |0> 0 yes

grn:1 grn:1 | -1/√2(|0> + |1>) -|0> 0 yes

red:0 grn:1 | 1/√2(|0> - |1>) |1> 1 no

grn:1 red:0 | -1/√2(|0> - |1>) -|1> 1 no

Note that the phase change formula and the color results are a mathematical representation of what is occurring within the quantum black box function. While we can measure the output qubit, we cannot access the specific button color information (which is encoded in the phase of the states). Nonetheless the information we can access is sufficient to determine whether the colors are the same.

|

| Figure 4: MZI with a glass sample representing the color green when button A is pressed |

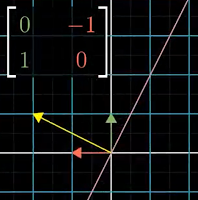

The effect of placing a sample on just one of the paths is that the interference at the final beam splitter will result in the photon always ending up at detector 2. Placing a sample on both paths means the photon will accumulate identical phase changes on each path which cancel out. So the effect is that the photon will always end up at detector 1 just as it would when there are no samples present. The glass samples thus implement the exclusive or (xor) operation in our quantum black box. While we are not able to determine the specific colors of the buttons (without investigating inside the black box), we can determine whether the colors are the same or not by observing which detector the photon arrives at.

References:

- Blog post on visualizing qubits

- Blog post on modeling quantum interference

- Quantum Algorithm with an example - Jonathan Hui

- Lecture on the Deutsch Algorithm - Scott Aaronson

- Q#: A Quantum Programming Language by Microsoft

- Simulating a Quantum Computer with a Mach-Zehnder Interferometer - Frank Rioux

"Suppose you are asked if two pieces of glass are the same thickness. The conventional thing to do is to measure the thickness of each piece of glass and then compare the results. As David Deutsch pointed out this is overkill. You were asked only if they were the same thickness, but you made two measurements to answer that question, when in fact it can be done with one." - Frank Rioux

--

[1] The coefficient of 1/√2 is the probability amplitude which, when squared, gives the probability of measuring the associated state. In this case, there is a 1/2 probability of measuring 0 and a 1/2 probability of measuring 1.

[2] The quantum black box function maps |x,y> to |x,y⊕f(x)) where ⊕ symbolizes xor. The input qubits to the function are:

|x> = 1/√2(|0> + |1>)

|y> = 1/√2(|0> - |1>)

Combining the two inputs (where ⊗ symbolizes the tensor product):

= 1/2(|0> + |1>)(|0> - |1>)

= 1/2(|0>(|0> - |1>) +

|1>(|0> - |1>))

Substituting y⊕f(x) for y:

|x,y⊕f(x)> = 1/2(|0>(|0⊕f(0)> - |1⊕f(0)>) +

|1>(|0⊕f(1)> - |1⊕f(1)>))

When f(x) = 0 (indicating red), the state remains unchanged. When f(x) = 1 (indicating green), the sign is flipped. This effect on the phase can be represented as (-1)f(x) giving (-1)0 = 1 and (-1)1 = -1 respectively. Continuing:

|1>((-1)f(1)|0> - (-1)f(1)|1>))

= 1/2((-1)f(0)|0>(|0> - |1>) +

(-1)f(1)|1>(|0> - |1>))

= 1/2((-1)f(0)|0> + (-1)f(1)|1>)(|0> - |1>)

= 1/√2((-1)f(0)|0> + (-1)f(1)|1>) ⊗ 1/√2(|0> - |1>)

So the separate output qubits of the quantum black box function are:

|y> = 1/√2(|0> - |1>)

Note: The reversible xor operation is implemented using a CNOT gate. When applied in the computational basis { |0>,|1> }, x is the control qubit and y is the target qubit. However when applied in the Hadamard basis { |+>,|-> } as above, y becomes the control qubit and x becomes the target qubit, as explained here.